ChatGPT-5 and the end of naive AI futurism

Turns out, scale is not all you need

First published at The Future, Now and Then

OpenAI’s release of GPT5 feels like the end of something.

It isn’t the end of the Generative AI bubble. Things can, and still will, get way more out of hand. But it seems like the end of what we might call “naive AI futurism.”

Sam Altman has long been the world’s most skillful pied piper of naive AI futurism. To hear Altman tell it, the present is only ever prologue. We are living through times of exponential change. Scale is all we need. With enough chips, enough power, and enough data, we will soon have machines that can solve all of physics and usher in a radically different future.

The power of the naive AI futurist story is its simplicity. Sam Altman has deployed it to turn a sketchy, money-losing nonprofit AI lab into a ~$500 billion (but still money-losing) private company, all by promising investors that their money will unlock superintelligence that defines the next millennium.

Every model release, every benchmark success, every new product announcement functions as evidence of the velocity of change. It doesn’t really matter how people are using Sora or GPT4 today. It doesn’t matter that companies are spending billions on productivity gains that fail to materialize. It doesn’t matter that the hallucination problem hasn’t been solved, or that chatbots are sending users into delusional spirals. What matters is that the latest model is better than the previous one, and that people seem increasingly attached to these products. “Just imagine if this pace of change keeps up,” the naive AI futurist tells us. “Four years ago, ChatGPT seemed impossible. Who knows what will be possible in another four years…”

Naive AI futurism is foundational to the AI economy, because it holds out the promise that today’s phenomenal capital outlay will be justified by even-more-phenomenal rewards in the not-too-distant future. Actually-existing AI today cannot replace your doctor, your lawyer, your professor, or your accountant. If tomorrow’s AI is just a modest upgrade on the fancy chatbot that writes stuff for you, then the financial prospects of the whole enterprise collapse.

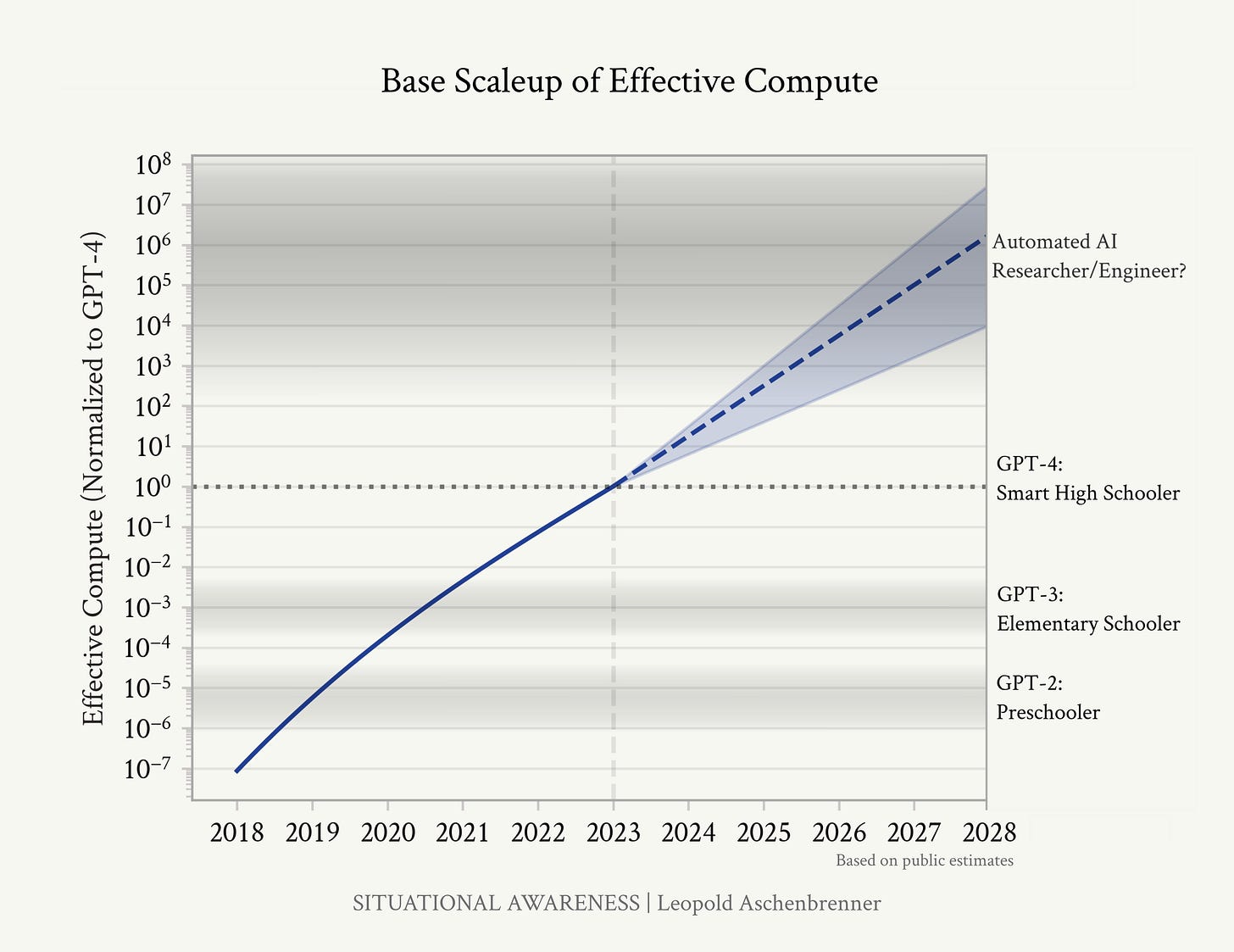

And so we have been treated to years of debates between AI boomers and AI doomers. They all agree that our machine overlords are on the verge of arrival. They know this to be true, because they see how much better the models have gotten, and they see no reason it would slow down. Leopold Aschenbrenner drew a lot of attention to himself in 2024 by insisting that we would achieve Artificial General Intelligence by 2027, stating, “[it] doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph.” More recently, the AI 2027 boys declared that we had about two years until the machines take over the world. Their underlying reasoning can be summed up as “well, GPT3 was an order of magnitude better than GPT2. And GPT4 is an order of magnitude better than GPT3. So that pace of change probably keeps going into infinity. The fundamental scientific problem has been cracked. Just add scale.”

The most noteworthy thing about the public reaction to GPT5 (which Max Read summarizes as “A.I. as normal technology (derogatory)”) is that it blew no one’s mind. If you find ChatGPT to be remarkable and life-changing, then this next advance is pretty sweet. It’s a stepwise improvement on the existing product offerings. But it isn’t a quantum leap. No radical new capabilities have been unlocked. GPT5 is to GPT4 as the iPhone 16 is to the iPhone 15 (or, to be charitable, the iPhone 12).

And that’s a problem for OpenAI’s futurity vibes. The velocity of change feels like it is slowing down. And that leaves people to think more about the capabilities and limitations of actually-existing AI products, instead of gawking at imagined impending futures.

Here’s a visual example. Take a look at this graph:

In the parlance of Silicon Valley, this is the classic “hockey stick” graph. The number! It goes up! Just look at that rate of change and extrapolate forward. It represents a company/product/technology that is going from zero to one. Exponential growth = unlocked. Keep it going. The world (or some piece of it, at least) is about to change.

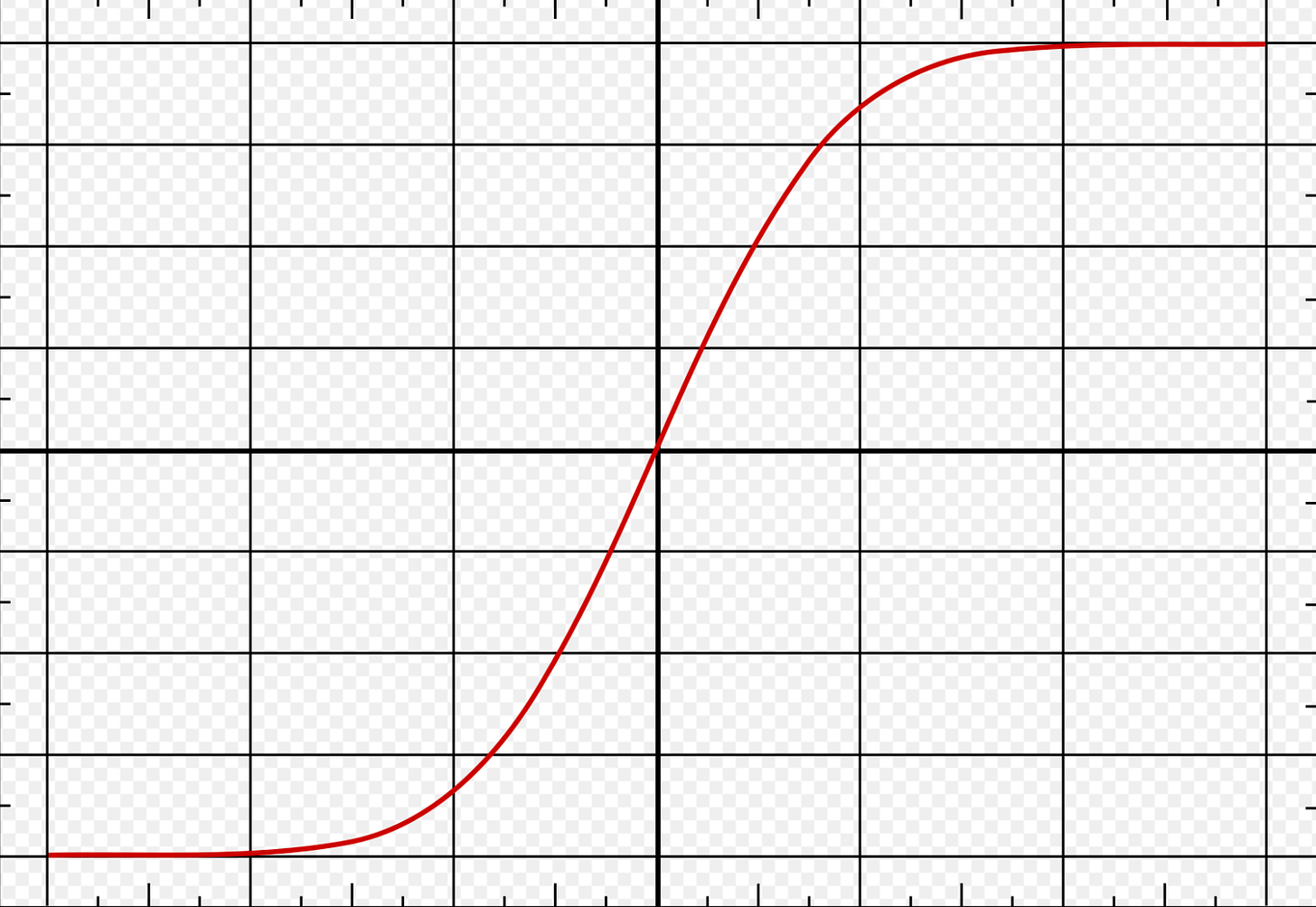

Now let me zoom out a bit:

Oh. The first graph was just the bottom-left quadrant of an S-curve. There was a phase of low growth, then a phase of rapid growth, and then the inevitable slowdown. This isn’t an exponential growth story we’re telling. It’s a standard diffusion-of-innovation story. The future isn’t radically changing. We’re just witnessing the uptake and social discovery of some new product line.

Sam Altman had dinner with a group of journalists last night, in an attempt to paper over GPT5’s clumsy rollout. Casey Newton provides the highlight reel. Two of his bullet points jumped out at me:

OpenAI is maybe basically profitable at the inference level. One of the funnier exchanges of the evening came when Altman said that if you subtracted the astronomical training costs for its large language models, OpenAI is "profitable on inference." Altman then looked to Lightcap for confirmation — and Lightcap squirmed a little in his seat. "Pretty close," Lightcap said. The company is making money on the API, though, Altman said. […]

OpenAI plans to continue spending astronomical amounts. Eventually, he said, OpenAI will be spending "trillions of dollars on data centers." "And you should expect a bunch of economists to wring their hands and be like, 'this is so crazy, it's so reckless,'" he said. "And we'll just be like, you know what? Let us do our thing, please."

Notice the gulf between those two statements. (1) The company is close to break-even on the one part of the business that generates revenue. (2) It plans to keep raising trillions of dollars from investors to spend on its broader ambitions.

…Look, I am not a business genius. But as I understand it, the point of a business plan is to eventually make more money than you are spending. If your company is break-even on merchandise, but loses billions per year on the rest of the product line, then that’s a company that is supposed to, y’know, fail.

The naive AI futurism story is how Altman brings in the cash for those trillion-dollar data centers. Investors have to believe that we are living through exponential times, that the next product release will fundamentally change life as we know it. Otherwise they’re just keeping the bubble afloat. And that story just became a lot harder to sell.

I still stand by my initial reaction thread, which I posted to Bluesky last week:

The release of GPT5 is giving off strong Apple Vision Pro vibes. There was like a full year where critics of VR/AR/XR headsets were cautioned “just wait for Apple to make its move. Whatever they release will be a step-change. The present is just prologue.”

What Apple released was technically very impressive. But it was also immediately clear that it solved none of the problems that critics had been pointing out for so long. And the question then became “by what standard should we judge this?”

If you compare the Apple Vision Pro to the Meta Quest 3, then holy shit it is so impressive. If you compare it to *what the boosters were promising for an entire year prior* then it’s a huge disappointment.

Ethan Mollick, one of Generative AI’s biggest boosters, heralded GPT5 as a triumph. His review gawked at its beautiful prose, and then parenthetically remarked “(remember when AI couldn't count the number of Rs in "strawberry"? that was eight months ago).”

It turns out that GPT5 can’t count the Bs in “blueberry.” Oh, and it STILL hallucinates (Mollick and other boosters used to insist that was a temporary glitch that would surely be solved by now)! BUT if you compare it to GPT4, it is more impressive.

My main prediction is that (1) the critics will spend the next few months noting that GPT5 doesn’t come close to the capabilities that its boosters spent two years insisting were imminent, and (2) the boosters will focus on how GPT5 is better than 4, and insist everyone should shut up and applaud.

Tech boosters have the memories of goldfish. So I want to state this very clearly, and in all caps: WE AREN’T HOLDING THIS TECHNOLOGY TO SOME ARTIFICIAL, IMPOSSIBLE STANDARD. WE ARE JUST ASKING WHETHER IT DOES THE THINGS THAT YOU BOOSTERS LOUDLY INSISTED IT WOULD DO. WE ARE HOLDING IT TO THE STANDARDS YOU SET OUT.

So that’s the take that I’m registering at this point. I don’t think we’ve quite seen the end of the Generative AI bubble. But I think this blew a hole in the naive AI futurism narrative, and we’ll eventually find that it was a load-bearing narrative.

I don’t think this takes us all the way to another “AI Winter” scenario. But I do suspect we’re going to start evaluating generative AI tools on the basis of what they actually do, instead of what they might someday become.