Episode Summary

Less than five years ago, studying online rumors and misinformation wasn’t a controversial job. It most definitely is now, however, thanks to a powerful group of reactionary politicians and activists who have realized that improving the quality of our political discourse has a negative effect on their electoral chances. We now live in a social media environment in which everything from harmless speculation to flagrant lying isn’t just permissible, it’s encouraged—especially on X, the badly disfigured website formerly known as Twitter.

My guest in today’s episode, Renée DiResta, saw all of this happen in real time, not just to the public discourse, but to herself as well after she became the target of a coordinated smear campaign against the work that she and her colleagues at the Stanford Internet Observatory were doing to study and counteract internet falsehoods. That was simply intolerable for Jim Jordan, the Ohio Republican congressman who argues that organizations seeking to improve information quality are really a “censorship industrial complex.”

Under significant congressional and legal duress, SIO was largely dissolved in June, a significant victory for online propagandists. Beyond their success at effectively censoring a private organization they despised, Jordan and his allies have also intimidated other universities and government agencies that might dare to document and expose online falsehoods.

Even if Jordan and his allies had not succeeded against SIO, however, it’s critical to understand that misinformation wouldn’t exist if people did not want to believe it, and that politicized falsehoods were common long before the internet became popular. Understanding the history of propaganda and why it’s effective is the focus of DiResta’s new book, Invisible Rulers: The People Who Turn Lies Into Reality, and it’s the focus of our discussion in this episode.

The video of this discussion is available. The transcript of audio is below. Because of its length, some podcast apps and email programs may truncate it. Access the episode page to get the full text.

Related Content

Why misinformation works and what to do about it

Renée DiResta’s previous appearance on Theory of Change

How and why Republican elites are re-creating the 1980s “Satanic Panic”

Big Tobacco pioneered the tactics used by social media misinformation creators

Right-wingers depend on disinformation and deception because their beliefs cannot win otherwise

Why people with authoritarian political views think differently from others

How a Christian political blogger inadvertently documented his own radicalization

The economics of disinformation make it profitable and powerful

Audio Chapters

0:00 — Introduction

03:58 — How small groups of dedicated extremists leverage social media algorithms

09:43 — “If you make it trend, you make it true”

13:32 — False or misleading information can no longer be quarantined

21:31 — Why social influencer culture has merged so well with right-wing media culture

26:50 — America never had a “shared reality” that we can return to

30:05 — Reactionaries have figured out that information quality standards are harmful to their factual claims

32:22 — Republicans have decided to completely boycott all information quality discussions

40:04 — Douglas Mackey and what trolls do

43:30 — How Elon Musk and Jim Jordan smeared anti-disinformation researchers

51:10 — Conspiracy theories don't have to make sense because the goal is to create doubt

53:40 — The desperate need for a “pro-reality” coalition of philanthropists and activists

58:30 — Procter and Gamble, one of the earliest victims of disinformation

01:01:00 — The covid lab leak hypothesis and how content moderation can be excessive

01:13:07 — JD Vance couch joke illustrates real differences between left and right political ecosystems

01:19:26 — Why transparency is essential to information quality

Audio Transcript

The following is a machine-generated transcript of the audio that has not been proofed. It is provided for convenience purposes only.

MATTHEW SHEFFIELD: So, your book and your career have upset a lot of people, I think that’s fair to say. And I guess maybe more your career more recently. So, for people who aren’t familiar with your story, why don’t you maybe just give a little overview?

RENEE DIRESTA: So yeah, I guess I’m trying to think of where to start. I spent the last five years until June of this year at the Stanford Internet Observatory. I was the technical research manager there. So [00:03:00] I studied what we call adversarial abuse online. So understanding the abuse of online information ecosystems: different types of actors, different types of tactics and strategies, sometimes related to trust and safety, sometimes related to disinformation or influence operations, sometimes related to generative AI or new and emerging technologies.

And we treated the internet as a holistic ecosystem. And so, our argument was that as new technologies and new actors and new entrants kind of came into the space, you’d see kind of cascading effects across the whole of social media. A lot of the work that I did focused on propaganda and influence and influencers as a sort of linchpin in this particular media environment.

And so I recently wrote a book about that.

SHEFFIELD: And you’ve got into all this based on your involvement in being in favor of vaccines.

DIRESTA: Yeah, yeah. You know, I got into it by, like, fighting on the internet, I [00:04:00] guess, back in 2014. No, I had my first kid in 2013, my son, and I was living in San Francisco.

I’d moved out there about two years prior. And you have to do this thing in San Francisco where you put your kid on all these like preschool waiting lists. And not even like fancy preschool, just like any preschool, you’ve got to be on a waiting list. And so I was filling out these forms and I started trying to Google to figure out where the vaccine rates were because at the time there was a whooping cough outbreak in California.

And there were all of these articles about how, at the Google daycare, vaccination rates were lower than South Sudan’s. Verbatim, this was one of the headlines. And I thought, I don’t want to send my kid to school in that environment. And I want to send him to a place where I’m not risking him catching easily preventable diseases.

This is not a crazy preference to have, it turns out, but it was on the internet. And so I got really interested in that dynamic and how I felt like my desire to keep my kid safe from preventable diseases [00:05:00] had been an accepted part of the social contract for decades. And all of a sudden I have a baby and I’m on Facebook and Facebook is constantly pushing me anti-vaccine content and anti-vaccine groups with hundreds of thousands of people in them.

So I started joining some of the groups because I was very curious, like, what happens in these groups? Why are people so compelled to be there? And it was very interesting because it was like a calling, right? They weren’t in there because they had some questions about vaccines.

They were in there because they were absolutely convinced that vaccines caused autism, caused SIDS, caused allergies, and all of these other lies, candidly, right? Things that we know not to be true, but they were really evangelists for this. And there was no counter-evangelism, right? There were parents like me who quietly got our kids vaccinated, nothing happened, and we went on about our days.

And then there were people who thought they had a bad experience or who had distrust in the government, distrust in science. And they were out there constantly putting out content about [00:06:00] how evil vaccines were. And I just felt like there was a real asymmetry there. Fast forward maybe a couple months after I started looking at those daycare rates and lists and preschool, and there was a measles outbreak at Disneyland.

And I thought, oh my God, measles is back, and measles is back in California in 2014. So that was where I started feeling like, okay, we could take legislative steps to improve school vaccination rates, and I wanted to be involved. And I met some other very dedicated people, mostly women, who did as well.

And we started this organization called Vaccinate California. And really, the mission was this vaccine kind of advocacy, but the learnings were much more broadly applicable, right? It was, how do you grow an audience? How do you ad target? How do you take a couple thousand dollars from, like, friends and family and put that to the best possible use to grow a following for your page?

How do you get people to want to share your content? What [00:07:00] content is the kind of content you should produce? Like what is influential? What is appealing? And so it was this, kind of building the plane while you’re flying it sort of experience. I was like, Hey, everybody on Twitter is using bots.

This is crazy. Do we need bots? All right, I guess we need bots. And just having this experience, 2015 or so is when all this was going down. And we did get this law passed, this kind of pro-vaccine campaign to improve school vaccination rates. It did pass, but I was more struck again by what was possible.

And by a feeling of, I guess, alarm at some of the things that were possible, right? That you could ad target incredibly granularly with absolutely no disclosure of who you were or where your money was coming from, that you could run automated accounts just to harass people, right? To kind of constantly barrage or post about them. That none of this was in any way, certainly wasn’t illegal, but it wasn’t even really, like, immoral.

It was [00:08:00] just a thing you did on the internet. And there were other things around that time, right? Gamergate happened around the same time. ISIS grew big on social media around the same time. And so, I just felt like, okay, here’s the future. Every campaign going forward is going to be just like this.

And I remember talking about it with people at the CDC, right? They asked us to come down and present at this conference. We made a whole deck about what we had done, this pro-vaccine mom group, and I was really struck by how, like, unimpressed they were by the whole thing. Not that we felt like we should be getting, like, kudos for what we had done, but that they didn’t see it as a battleground, that they didn’t see it as a source of influence, as a source of where public opinion was shaped, that they didn’t see groups with hundreds of thousands of people in them as a source of alarm.

And the phrase that they said, that I referenced in the book a bunch of times, is “these are just some people online,” right? So there’s a complete [00:09:00] lack of foresight of what was about to happen because of this belief that institutional authority was enough to carry the day. And my sense that it was not.

SHEFFIELD: Well, and also that they did not understand that.

I mean, I think we have to say at the outset that while you were encountering all this anti-vaccine content, that’s not what the majority opinion was. The majority opinion was pro-vaccine. And so, yeah, with these bots and all these other different tactics of agreeing to share mutual links and whatnot, they’re able to make themselves seem much more numerous than they really are.

DIRESTA: Yep.

“If you make it trend, you make it true”

SHEFFIELD: And then eventually the algorithm kicks in and that promotes the stuff also. And you have a phrase which you have used in the book and used over the years, which I like, which is that if you make it trend, you make it true.

So what does that mean? [00:10:00] What does that mean?

DIRESTA: So it was almost, you know, you essentially create reality, right? This is the thing that we’re all talking about. This is the thing that everybody believes. Majority illusion is what you’re referencing there, right? You make yourself look a lot bigger than you are.

And algorithms come into play that help you do that. And in a way, it becomes something of a self-fulfilling prophecy. So there’s a certain amount of activity and energy there. The content, whatever it is, gets engagement, the hashtag trends. There was so much effort being put in, in the 2014 to 2016 or so timeframe, into getting things trending because it was an incredible source of, like, attention capture.

You were putting an idea out there into the world, and if you got it done with just enough people, it would be pushed out to still more people. The algorithm would kind of give you that lift and then other people would click in and would pay attention. And so there was a real means [00:11:00] of galvanizing people by calling attention to your cause and making them feel kind of compelled to join in.

So one of the things that we saw, I’m trying to remember if I even referenced this part in the book, was the ways in which you could, like, connect different factions on the internet. I have a graph of this that I show in a lot of talks where what you see is, like, the very long-established anti-vaccine communities in 2015, the people who’d been on there screaming about vaccines causing autism for years.

They didn’t get a lot of pickup with that narrative. There were still enough people who were like, no, I don’t know, like, the researchers over here, this is what it says. And you see them pivot the frame of the argument after they lose a couple of votes in the California Senate, I think it was.

And you see them move from talking about the sort of health impacts, the sort of things that we call misinformation, right? Things that are demonstrably false. You see them pivot instead to a frame about rights, about health freedom, medical freedom. [00:12:00] And you see them, they call it “marrying the hashtags,” is the language that they use when they’re telling people on their side to do this, which is to tweet the hashtag for the bill, some of the vaccine hashtags, and then they say, tag in, you might remember this one, #TCOT, Top Conservatives on Twitter, or #2A, which is the Second Amendment people. For a long time before the First Amendment became the be-all and end-all on Twitter, it was actually the Second Amendment.

That was the thing that you would see kind of conservative factions fighting over in the days of the Tea Party. And so you saw the anti-vaccine activists, again, these people who have this deeply held belief in the health lies, pivoting the conversation to focus instead on, okay, it doesn’t matter what the vaccines do, you shouldn’t have to take them.

And that becomes the thing that actually enables them to really kind of grow this big tent. And you see the movement begin to expand as they start making this appeal to more sort of a libertarian or Tea Party politics that was [00:13:00] quite prominent on Twitter at the time. So essentially that is how they managed to grow the movement. So the “make it trend, make it true” thing was just, if you could get enough people paying attention to your hashtag, your rumor, your content, nobody’s going to see the counter-movement, the counter fact-check, the thing that’s going to come out after the trend is over.

It’s the trend that’s going to capture attention, stick in people’s minds, and become the thing that they think about as reality when they’re referencing the conversation later.

False or misleading information can no longer be quarantined

SHEFFIELD: Well, and the reason for that is this other idea which you talk about, which is that media is additive.

When people think that, well, I can somehow stop bad views from being propagated on the internet, like, that’s the idea, that’s not true. And it never was. And, I mean, you can definitely lessen the impact, because I think it is the case, for instance, that Tucker Carlson, [00:14:00] once he was taken off Fox News, his influence has declined quite a bit.

And Milo Yiannopoulos, another example. Now he’s reduced to saying that he’s an ex-gay Catholic activist. I saw that go by.

DIRESTA: Yeah, no, I definitely saw that one go by. There’s one thing that happens though, where Twitter steps in at some point also, because they realize that a lot of the efforts to make things trend are being driven by bots and things like this, right?

By automated accounts. And so they come up with, at this point, rubrics for what they consider to be a “low quality account,” is the term. And that has been reframed now in the sort of political, polarized arguments about social media, but the low quality account vision that went into it was this idea that they wanted trends to be reflective of real people and real people’s opinions.

And so you do see them trying to come in and filter out the accounts that appear to be there, like, [00:15:00] to spam or to participate in spamming trends and things like this. And so Twitter tries to correct for this for a while in the 2017 to 2019 timeframe. It’s unclear what has happened since.

SHEFFIELD: Yeah, well, I mean, this idea of how to adjust algorithms, that is kind of one of the perpetual problems of all social media. And, you know, I feel like the people who own these platforms and run them, they don’t want to admit that any choice in an algorithm, whether it’s reverse chronological, per account, mutuals, interests, or whatever their metric is, is a choice, and it is a choice to boost things. And it seems like a lot of people in tech, they don’t want to think that they’re making a choice.

DIRESTA: I [00:16:00] think now,

SHEFFIELD: now,

DIRESTA: Now, if you talk to people who work on social media platforms, I don’t think that that’s a controversial thing to say anymore. I think in, again, the 2016, 2017 timeframe, there was this idea that there was such a thing as a neutral, right? That there was a magical, algorithmic, pure state of affairs.

And this led to some really interesting challenges for them because they ultimately were creating an environment where whoever was the best at intuiting what the algorithm wanted and creating content for it could essentially level up their views. So one of the things that happened was Facebook launches this feature called the watch tab. They do it to compete with YouTube and they don’t have the content creator base of YouTube.

So there is a bunch of new content creators who begin to try, who [00:17:00] realize that Facebook is aggressively promoting the watch tab. So they’ve created an incentive and then the watch tab is going to surface certain types of content. So you see these accounts trying to figure out what the watch tab wants to recommend, what it’s, what it’s geared to recommend.

And they evolve over time to creating these kinds of content where the headline, like the sort of video title, is “what she saw when she opened the door,” right? Or “you’ll never believe what he thought in that moment,” these sorts of, like, clickbait titles. But it works and the watch tab is pushing these videos out.

They’re all about, like, 13 to 15 minutes long, right? So these videos really require a lot of investment and the audience is sitting there, like, waiting for this payoff. It’s like, what happens when she opens the door? I don’t know. She hasn’t opened the door yet. And you just sit there and you wait and you wait and you watch and you watch.

And so the algorithm thinks this is fantastic, right? It’s racking up watch minutes. People are staying on the platform. The creators are earning tons of [00:18:00] money there. This is being pushed out to literally millions of people, even if the pages only have about 10,000 followers. And so you watch this entire ecosystem grow and it’s entirely, like, content produced solely to capture attention, entirely to earn the revenue share that the platform has just made possible.

So it’s like the algorithm creates an incentive structure and then the content is created to fill it. And the influencers that are best at creating the content can profit from it and maximize both their attention, right? The attention, the clout that they’re going to get from new followers, and also financially to profit from it.

So unfortunately the idea that there’s, like, some neutral, it’s just not exactly right. Even if you have reverse chronological, what you’re incentivizing is for people to post a whole lot, so they’re always at the top of the feed. So it’s just this idea that you’re always going to have actors responding to those incentives, and this is just what we get on social media.

It’s not a political thing at all. It’s just creators [00:19:00] meeting the rules of the game. Right.

SHEFFIELD: The algorithms of who was boosted or what was not boosted, like, people eventually started imbuing all kinds of human decisions into algorithms also. Like, I see that all the time. People, they’re like, I’m shadow banned, or I’m this or that. And then you look at their posts and they’re just kind of boring, they’re just some links. And especially Twitter now, like, they will penalize you if you’re not somebody that is regarded as a news source or something like that, whatever the term is they use. Like, you’re going to get penalized if you post a link in the post.

But a lot of people, they don’t know how the algorithms work. And so, like, they think that they’re being deliberately individually suppressed. And that’s just not true.

DIRESTA: It’s weirdly narcissistic in a way. I would occasionally see, so some of it is genuine lack of understanding.

I remember in 2018, [00:20:00] having conversations with people as the sort of shadow banning theories were emerging and asking folks, like, why do you think you’re shadow banned? These were accounts that had maybe a couple hundred followers, not very much engagement. They were not big political power players or shitposters or anything, just kind of ordinary people.

And they would say, like, well, my friends don’t see all of my posts. And that’s when you realize that there’s, like, a disconnect. They do not understand that algorithmic curation is the order of the day, and that whatever it is that you’re creating is competing with a whole lot of what other people are creating.

And something somewhere is stack ranking all of this and deciding what to show your friends. And so they interpret it as somehow being sort of a personal ding on them. And the part that I always found sort of striking was when you had the influencers who know better, right? Who do understand how this works.

And they would use it as a monetization strategy, as an audience [00:21:00] capture, sorry, not audience capture, but as an audience attention grab, where they would say, like, I am so suppressed. I am so suppressed. I only have 900,000 followers. I remember after Elon took over Twitter, I thought, okay, maybe this is going to go away now, but it didn’t.

Then it turned into, Elon, there’s still ghosts in the machine. There’s still legacy suppression that’s happening. You need to get to the bottom of it. And so it was completely inconceivable to them that like some of their content just wasn’t that great or some of their content just wasn’t, where the algorithmic tweaks had gone.

Why social influencer culture has merged so well with right-wing media culture

DIRESTA: So there’s an interesting dynamic that happens on social media, which is that people see influencers as being distinct from media, right? They present as just them. They’re not attached to a branded outlet.

Maybe they have a Substack or something like that, but they’re not seen as being part of a major media institution or brand. And so they’re seen as being more trustworthy, right? They’re just like me. They’re a person who is like me, who is out there, sees the world the way I see it, and what they have to say [00:22:00] resonates with me.

So it’s a different model. And one of the things that the influencer has to do is they’re constantly working to grow that relationship with their fandom. And what you start to see happen on social media is this idea that they are somehow being suppressed, that their truths are somehow being prevented from reaching people.

It’s both a sort of an appeal for, for support, right? For support and validation from the audience. But it’s also, it also positions them as being somehow very, very important, right? So, I am so suppressed because I am so important. I am over the target. I am telling you what they don’t want you to know.

So that presentation kind of makes them seem more interesting. It increases their mystique. It increases their clout, particularly among audiences who don’t trust media, don’t trust institutions, don’t trust big tech. So in a sense it’s almost a marketing [00:23:00] ploy for influencers to say, you should follow me because I am so suppressed.

SHEFFIELD: Yeah. Well, and it’s critical to note that this is also fitting into a much larger and longer-lasting pattern that has existed on the political right. Since the

DIRESTA: idea that the mainstream media is biased against you and your truths. And so, here’s this alternative series of outlets.

And I think the influencer is just one step in that same chain, but what’s always interesting to me about it is the presentation of themselves as distinct from media, even though they have the reach of media. They are presenting themselves as an authoritative source of either information or commentary.

And so, in the realm of the political influencer especially, it’s like media, but without the media brand. Everything else is very much the same.

SHEFFIELD: It is. Yeah. And I mean, in a lot of ways, talk radio is the model here, that this is just [00:24:00] a slightly different version of it.

And that’s why I do think that, like, when you look at the biggest podcasts or biggest YouTube channels and whatnot, they are almost overwhelmingly right wing, because that’s what the audience expects. They’re used to sitting there and listening to somebody talk to them for three hours.

Whereas on the left or center left, the things have to be a lot shorter. And they have to be conversations instead of monologues that are three hours long. But the other issue, though, is that there is an epistemic problem as well on the right wing in America.

I mean, it is the case that William F. Buckley Jr.’s first book, God and Man at Yale, was about how these professors were mean because they didn’t believe in the resurrection. They were mean because they taught evolution and they didn’t take seriously the idea [00:25:00] that maybe the earth was created in 6,000 years.

Maybe that was, why can’t we teach the controversy? We need to teach the controversy. Like, to me, that’s kind of the original alternative fact: evolution is not true. That was the whole

DIRESTA: thing. Do you remember, it would have been, gosh, sometime in the 2014, 2015 timeframe, there was this paper that made the rounds where Google was trying to figure out how to assess questions of factuality.

Right. And I’m trying to remember what they called it. It had a name. It wasn’t, like, truth rank or something? Maybe it was that, actually. But the media coverage of it, particularly on the right, made exactly this point: well, how old are they going to say the earth is? And that was, like, the big gotcha.

Um, And you weren’t

SHEFFIELD: there, Renee. So how do you,

DIRESTA: how do you, how do you even know scientists, fossils, who even knows, right. But the it was an interesting, It is sort of first [00:26:00] glimpse into at the time people were saying like, Hey, as more and more information is, sort of proliferating on the internet, how do you return good information?

And there was that Google knowledge, you started to see search results that returned, not just the list of results, but that had the answer kind of up there in the the, the sort of knowledge pain. And if you searched for age of the earth, it would give you the actual age of the earth.

And that became a source of some controversy. So one of the first kind of harbingers of what was going to happen as a social media platforms or search results for that matter, search engines tried to try to curate accurate information as there was a realization that perhaps surfacing accurate information was a worthwhile endeavor.

And now it sounds. Controversial to say that whereas 10 years ago it was seen as a, the normal evolution and helping, helping computers help you.

America never had a “shared reality” that we can return to

SHEFFIELD: I think it’s unfortunate that there is a lot of discourse about, we, we don’t have a shared reality anymore.

You don’t have, the [00:27:00] unfortunate problem is, we never had it. It’s just like in astronomy, a lot of times you can’t see planets because they don’t generate light, or we can’t see neutron stars because they don’t generate light, or very little.

In essence, these alternative realities, they were always there. People who work in academia or work in journalism or work in knowledge fields didn’t know that they were there. I mean, it is the case that when you look at Gallup polling data, 40 percent of American adults say that God created humans in their present form and that they did not evolve.

And only 33 percent say that humans evolved with God guiding the process. And then the smallest percentage, 22 percent, says that humans evolved and God was not involved in that [00:28:00] process. There’s all kinds of things you could use to illustrate that.

I mean, these opinions have always been out there, anti-reality was always there. It’s just that now it affects the rest of us, is the problem.

DIRESTA: That’s an interesting point. I remember reading the evolution-creation debates on the internet because that was, like, the original source of debating and fighting, and you could find that stuff on the internet. And there were the flat earthers, but that always seemed like a joke until all of a sudden one day it didn’t.

And yeah, the chemtrails groups. One thing I was struck by was when I joined some of those anti-vaccine groups, the chemtrail stuff came next, the flat earth stuff, the 9/11 truth stuff. It was just this entire, yeah, conspiracy correlation matrix basically, that was just like, oh, you like this? You might also like this and this and this and this and this.

And so it was an interesting glimpse into the sort of Venn diagrams of those different belief [00:29:00] structures. But one thing I think that was distinct is that it was the ones that required a deep distrust in government to really continue.

So I think that that was also different. I don’t think, you would see the evolution debate come up in the context of what should we put in the textbooks, right? This teach the controversy thing that you referenced. But it, it didn’t. It seems like that existed in a time when trust in government was still higher.

Now you have, I think, a lot more conspiracy theories where the belief seems more plausible because people so deeply distrust government. And so that cycle has been happening. And when you speak about the impact it has, it does then interfere with governance and things like that in a distinctly different way than it maybe seemed like it did two decades ago.

SHEFFIELD: Yeah. And at the same time, I mean, Margaret Thatcher had her famous saying, which she said twice in the interview, that there’s no such [00:30:00] thing as society. We’re just a bunch of individuals. That’s all that we are.

Reactionaries have figured out that information quality standards are harmful to their factual claims

SHEFFIELD: And yeah, I mean, you’re right. I think that it obviously has gotten more pronounced, and this skepticism has become more of a problem. But it’s become a problem also in the social media space, because trying to do anything to dial back falsehoods, even flagrant ones, dangerous ones that will cause people to die, that’s controversial now. And it wasn’t. And you yourself have experienced that, haven’t you?

DIRESTA: Well, I mean, it was a very effective, I think, kind of grievance campaign. I remember, Donald Trump was in office and there was this, do you remember that form?

They put up like a web form: “Have you been censored on social media? Let us know.” Yeah. And then they, I think they got a, that was the

SHEFFIELD: fundraising ploy. They got a [00:31:00] bunch of

DIRESTA: dick pics. Yeah, exactly. Dick pics and an email list. Because it wanted, like, screenshots of evidence, which I thought was almost like sweet in how it did not understand what was, I wasn’t gonna come.

SHEFFIELD: The post is deleted. You’re not gonna see it.

DIRESTA: But that kind of belief really began to take hold, that there was this partisan, politically motivated effort to suppress conservatives, despite the abundance of research to the contrary and the lack of evidence.

It was almost like the lack of evidence was the evidence at some point. “Well, you can’t prove they’re not doing it.” “Well, here’s what we see.” “No, no, no, no. That research is biased. The wokes did it. Twitter did it. The old regime at Twitter did it.” There was no universe in which that catechism was going to be untrue.

Right. And so it simply took hold and then they just looked for evidence to support it. And then all of a sudden they looked for evidence to run vast congressional [00:32:00] investigations into it. And it has always been smoke and mirrors, no there there, but that doesn’t matter at this point because you have political operatives who are willing to support with their base by prosecuting this grievance that their base sincerely believes in because they’ve heard for... Yeah,

Republicans have decided to completely boycott all information quality discussions

SHEFFIELD: well, and, and the thing that, and I can say this, having been a, a former conservative activist, that one of the things that made me leave that world was because I did, I was kind of, I mean, I worked in the, in the liberal media bias world saying that all the media is out to get Republicans.

And so, I, I started getting into this idea on social media. Well, are, are they biased against conservatives? And eventually I had the revolutionary and apparently subversive idea that, well, what if Republicans are just more wrong? What if our ideas are not as good?

Like, if we believe things that [00:33:00] aren’t true, then maybe they shouldn’t be promoted. And I’ll tell you, when I started saying that to people, they told me to stop talking about that. And even now, like, I mean, we should get into more specifically the things that happened to you, but before the right wing targeted the Stanford observatory and you yourself, you were trying to have dialogue with people who were saying these things about, “We’re being censored. Big tech is out to get us,” et cetera.

And you asked them, okay, well, so what kind of rubric do you want? How should we deal with this stuff? Obviously, I assume you don’t think that telling people to buy bleach and drink it to cure their toenail fungus or whatever.

They will say they don’t agree with that stuff. But then when you ask them, okay, well, so what should we do? We never got a response.

DIRESTA: No, there’s never really a good answer for that. I mean, one of the things that [00:34:00] long before joining Stanford, starting in 2015, I started writing about recommendation engines, right?

Because I thought, hey, the anti-vaccine groups are not outside of the realm of acceptable political opinions. There’s no reason why it shouldn’t be on Facebook. There is something really weird about a recommender system proactively pushing it to people. And then, like I said, I joined a couple of these groups with a totally different new account that had no past history of my actual interests or behavior.

So at this point, the clean-slate account joined those groups. Like I said, I got chemtrails, I got flat earth, got 9/11 truther, but then I got Pizzagate. Right. And then after I joined a couple of Pizzagate groups, those groups sort of all morphed into QAnon. A lot of those groups became QAnon groups.

And so QAnon was getting pushed to this account, again, very, very, very early on. And I just thought, like, we’re in this really weird world. It became pretty clear, pretty quickly that QAnon was not [00:35:00] just another conspiracy theory group, right? That QAnon came with some very specific calls to action, that QAnon accounts, sorry, QAnon people, adherents is the word I’m looking for, committed acts of violence or did weird things in the real world.

And you see Facebook classify it as a dangerous org and begin to boot it off the platform, but in the early days, that’s not happening. And I thought, it’s weird when the nudge is coming to encourage people to join, as opposed to people proactively going and typing in the thing that they know they want to find and that they’re consciously going and looking for.

Like, that’s two different behaviors. Sort of push versus pull, we can call it. And I did think, and I do think, that as platforms serve as curators and recommenders, running an entire, essentially, social connection machine that is orienting people around interests and helping them find new interests does come with a set [00:36:00] of ethical requirements.

And so I started writing about, like, what might those be? Not even saying I had the answers, just saying, like, is there some framework, some rubric we can come up with by which we have that? And the phrase that eventually came to be associated with that idea was freedom of speech, not freedom of reach, right?

That you weren’t owed a megaphone. You weren’t owed algorithmic amplification. There was no obligation for a platform to take your group and proactively push it up to more people, but that in the interest of free expression, it should stay up on the platform. And you could go do the legwork of, growing it yourself if you wanted to.

So, that was the idea, right? The question of, like, how do you think curation should work? Something is being up-ranked or down-ranked at any given time, whether that’s a feed ranking or a recommender system or even a trending algorithm. So what is the best way to do that? And I think that is still today the really interesting question about [00:37:00] how to treat narratives on social media.

SHEFFIELD: Yeah. And it’s one that the right is not participating in at all. Not at all. And so, I mean, and that’s, and the reality is these decisions will be made whether they participate or not, like they have to be made. These are things that exist and these are businesses that have to be run and they will be made.

So, to me, that’s been the most consistent pattern in how reactionary people have dealt with you over the years: they don’t actually talk to you in a serious way. It’s easier to say, like, oh,

DIRESTA: it’s all censorship. That’s a very effective buzzword, a very effective term that you can redefine. “Censor” is a nice epithet you can toss at your opponent, but no, it misses the key question.

I think it’s worth pointing out, by the way, that every group at some point has [00:38:00] felt that they have been censored or suppressed in some way by a social media algorithm, right? The right has made it the central grievance of a political platform, but you do see these allegations with regard to, like, social media is suppressing marginalized communities, right?

That is the thing that you hear on the left and have heard on the left for a very long time. In the days sort of immediately after October 7th in Israel, there was an entire report that came out alleging that pro-Palestinian content was being suppressed.

Unfortunately, the methodology often involves asking people, “Do you feel like you’ve been suppressed?” And it’s stupid. It’s a terrible, terrible mechanism for assessing these things. But it is at least one way to see where the pulse of the community is. People don’t trust social media platforms.

That’s just not unreasonable, right? They’ve done some pretty terrible things. You do see, as the platforms [00:39:00] sit there trying to figure out what to up-rank or down-rank, the lack of understanding and the lack of trust come into play.

Did you see, God, it was yesterday. It was, like, Libs of TikTok going on about how ChatGPT was suppressing the Trump assassination because she was using a version that had been trained on material prior to the Trump assassination happening, right? And so it was such a ridiculous, like, just this grievance, but oh my God, the engagement the grievance gets.

And again, okay, people on the internet, they believe stupid things, but then Ted Cruz amplifies it. A sitting congressional regulator, Senator Ted Cruz, is out there amplifying this stupid grievance based on a complete misunderstanding of the technology she’s using. And he sees it as a win.

That’s why he’s doing it. And, and it’s like so [00:40:00] paralyzingly stupid actually, but you know, this is where we are.

SHEFFIELD: Yeah.

Douglas Mackey and what trolls do

SHEFFIELD: Well, and there’s a guy that you talk about in the book who I think, for a lot of people, kind of fell off the radar because he got arrested, the guy whose name is Douglas Mackey.

He went by Ricky Vaughn on the internet, on Twitter, and he went on trial for creating a deception operation to tell black voters to not vote on election day. And in what was it? 2016. And yeah. And so he went on trial because that is a crime. And he got convicted of it and he got sentenced as well.

So, but in the course of the trial, some of the other trolls, and it’s fair to say these people are neo-Nazis, that’s who they worked with, and Mackey was working with Weev, who is a notorious neo-Nazi hacker. And anyway, one of the other trolls involved with this, who went by the name Microchip, he said something that I thought was a [00:41:00] very frank description of what it is that they’re doing.

He said, my talent is to make things weird and strange. So there is controversy. And then they asked him, well, did you believe the things you said? And he said, no, and I didn’t care. And that’s, that’s the problem. Like, how can you have a shared reality with people who that’s their attitude? And I mean, you, you can get into that sort of toward the end, but I don’t know.

It’s, it’s, it’s a question that more people should think about.

DIRESTA: You should write a book about it.

It’s a hard one. I get asked a lot of the time. In the stories about me, right, the weird conspiracy theories that the CIA placed me in my job at Stanford.

Man, I’ve had some real surreal conversations, including with, like, print media fact checkers. I got a phone call one day that was like, hey, we’re doing this. You’re a supporting character. [00:42:00] You’re tangentially mentioned, but hey, I need to ask, the person is saying that the CIA got you your job at Stanford.

And that seems a little bit crazy. I was like, it’s more than a little bit crazy. A little bit’s not quite it. I didn’t even know that counts as defamatory because it sounds cool, but it’s fucking not true. And you find yourself in these situations where, I was like, I’m frankly floored that I am being asked to prove that that is not true when, like, what did you ask that guy? Did you ask him for some evidence? Did you ask him, like, what are you basing this on? Like, some online vibes, some shitposts from randos, and then you decide that this is enough for you to say the thing.

I am the one who has to prove the negative. Like, it’s the weirdest. I was actually really irritated by that, to be honest. I was just like, what kind of information environment are we in where I’m being asked [00:43:00] to deny the most stupid, spurious allegations and nobody is asking the people pushing them for the evidence?

And that’s where I did start to feel like, over the last year or so, I’d sort of gone down into this mirror world where the allegation was taken as fact and the onus was on me to disprove it. And that’s just not a thing that you can do, really.

That’s unfortunately the problem. So,

SHEFFIELD: yeah.

How Elon Musk and Jim Jordan smeared anti-disinformation researchers

SHEFFIELD: Well, and that certainly snowballed eventually. So these bad faith actors went from ignoring your questions about moderation standards and epistemology to starting to attack you and other people who study disinformation and misinformation.

And you, you and others were figures in the Twitter files. Yeah. Elon Musk and his I guess now former friends mostly seems like [00:44:00] but what, what was the, I guess maybe do a little brief overview of that. And

DIRESTA: Yeah, so we had done a bunch of work, public work, it was all over the internet, in 2020 looking at what we called misinformation at the time, but it became pretty clear that it was, like, election rumors, right? It was people who were trying to delegitimize the election preemptively. And we had a very narrow scope. We were looking at things like Mackey’s “vote on Wednesday, not on Tuesday” kind of stuff.

So we were looking for content that was trying to interfere in the process of voting or content that was trying to delegitimize voting. And so we did this very complicated, comprehensive project over the course of about August to November of 2020, tracking these rumors as they emerged, with a bunch of students working on the project, really very student driven.

We connected with tech platforms every now and then to say things like, “Hey, here’s a viral rumor. It seems to violate your policies. Have at it, do whatever it is you’re going to do.” We connected with state and local [00:45:00] election officials occasionally. Mostly they would reach out sometimes, and they would, we called it filing a ticket.

They would sort of file a ticket. And they would ask about content that they saw that was wrong. Right. And so that was stuff like Hey, we’ve got this person, this account, it’s claiming to be a poll worker. We have no record that anybody with that name is a poll worker, but it’s saying a lot of stuff that’s just wrong.

And we’re worried that it’s going to undermine confidence in the vote, like in that district. So that was the kind of thing that we would look at. And then we would send back a note sometimes saying, like, here’s what you should do with this. Here’s what you should do with that. And so that was the process that we went through with all these rumors.

And then in 2021, we did the same kind of thing, but with vaccine rumors. And in that particular case, obviously it wasn’t state and local election officials. We would just track the most viral narratives related to the vaccine for that week. We published it as PDFs, a PDF once a week on the website, completely public.

Anybody could see them. And that was, and then we would send them to people who’d signed up for our mailing [00:46:00] list, and that included public health officials some folks in government, anybody who wanted to receive that briefing, which was just a repurposing of what was on the website. So those were our projects.

And they were refashioned by the same people who tried to vote not to certify the election or tried to overturn the election, and some of the kind of right-wing COVID influencers. They were reframed as not academic research projects, but as part of a vast plot to suppress all of those narratives, to take down and delete all of those tweets, to silence conservative voices.

And as this rumor about our work snowballed, it eventually reached the point where somebody went on Tucker Carlson to say that we had actually stolen the 2020 election, that this was how we had done it. We had suppressed all of the true facts about voter fraud and all the other things. And as a result, people hadn’t voted for Donald Trump.

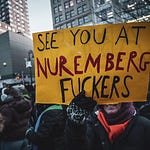

So it was just complete surreal nonsense. But again, [00:47:00] having rumors about you on the internet is an inconvenience. It’s an annoyance. You get death threats, people send you crazy emails. But what happened that was really troubling was that sitting members of Congress took up the cause, and people who had subpoena power then used nothing more than online lies about our work to demand access to our emails.

So the way that this connects to the Twitter files is one of these individuals, a right-wing blogger who created this website that he called the Foundation for Freedom Online, it was basically just him, began to write these stories about us. And then he aggressively, repeatedly, over a period of almost two months, tried to get Matt Taibbi to pay attention to him.

And eventually, in March of 2023, he connects with Taibbi, who’s been doing several of these Twitter files, in a chat room, sorry, in a Twitter Spaces kind of voice chat. And he tells him, “I have the keys to the kingdom. I’m going to tell you about that CIA [00:48:00] woman who works at Stanford who has FBI-level access to Twitter’s internal systems.”

And now these are words that mean nothing. Like, what the hell does FBI-level access mean? And I never had access to any internal system at Twitter, but again, it doesn’t matter because he piques Taibbi’s interest, connects with him. And then all of a sudden, Jim Jordan is requesting that Taibbi come and testify about the Twitter files.

And rather than testifying about the things that are actually in the Twitter files, he and Michael Shellenberger, who’s the co-witness, start to just regurgitate the claims about the mass suppression of conservative speech that this individual has written on his blog. So there’s no evidence that is actually offered, but in response to the allegation being made, Jim Jordan then demands all of our emails.

And so all of a sudden, Matt Taibbi and Michael Shellenberger say some stuff on Twitter and Jim Jordan gets to read my emails. And that’s really all it takes. And that was the part where I was like, wow. I, maybe rather naively, [00:49:00] thought that there was, like, more evidence required to kick off a massive congressional investigation, but that’s not how it works today.

SHEFFIELD: Well, and ironically, it was done by the House Committee on Government Weaponization, which turned into nothing but weaponizing government.

DIRESTA: Yeah. I mean, the whole thing is, I mean, honestly, it should be kind of depressing to anybody when you realize that it’s really just norms that are keeping the wheels on the bus.

And that when you move into this environment where the end justifies the means, there is very little in the way of guardrails on this sort of stuff, except basic decency or, in more Machiavellian terms, a sense that this is going to come back and bite them. But it’s not going to at all.

Nothing is ever going to happen either to the people who lied or to the bloggers or to the Twitter files guys, or to the members of Congress who [00:50:00] weaponized the government to target the First Amendment-protected research of random academics. Nothing is going to happen to any of them. There will be no consequences.

And that’s when you start to realize that there’s really very little that is we, we’ve, we’ve created a terrible system of incentives here. Yeah.

SHEFFIELD: Yeah. And and what’s, what’s awful is. I think that even now after what happened, so I mean, we need to say that, after all the, the legal threats and there were other ones as well the Missouri versus Biden case and some of the other ones that targeted the Stanford Internet Observatory.

I mean, the goal was to shut the program down, and they succeeded.

And yet I feel like, generally speaking, outside of the people who directly work in this area, I don’t think that there is any concern about [00:51:00] this problem at all, or

DIRESTA: this is where what I’ve tried to do, like, I’ll be fine.

I’m not worried about, like, me. But,

Conspiracy theories don’t have to make sense because the goal is to create doubt

DIRESTA: Some of the people that I spoke with when it all began to happen, when the first kind of congressional letters showed up, were some of the climate scientists, like Michael Mann, who I reference in the book, who had been through this with his own sort of fight in the pre-internet days of 2012.

But again, he had done research that was politically inexpedient, right, showing the sort of hockey stick graphs around climate change and kind of human-impacted climate change. And he wound up getting hauled in front of congressional hearings, having his email gone through, a whole bunch of different things happened to him.

And one thing I was struck by was how familiar the playbook was. Right. And I read Naomi Oreskes’s book, Merchants of Doubt. And she also goes into it with regard to [00:52:00] scientists who are trying to say, “Hey, it looks like tobacco is really not great. It looks like it might cause cancer,” and this sort of retaliation.

And and, and what you see from the companies where they say, like, we just have to discredit the people who are saying this, right. We don’t have to offer, we don’t even have to bolster our facts. We just have to attack these people that that’s, what’s going to work, right. Smearing them is the most effective thing to do here.

We just have to create doubt, a lack of confidence in what they’ve done and said. We have to turn them into enemies. And you see that model of the smear working very, very well whenever there is something that is politically inexpedient. And again, the people who were quote-unquote investigating us were the people who were very angry that we had done this very comprehensive kind of research project tracking the Big Lie, and the people who propagated the Big Lie were the ones who were mad at it.

And once they had gavels, they retaliated. That’s how it works. The problem is that playbook is very effective. It’s very [00:53:00] hard to respond to rumors and smear campaigns. Institutions are notoriously bad at it. And so the question then becomes, like, which field gets attacked next? And that’s where I think the focus shouldn’t be on us or SIO or any one institution that’s experiencing this. It should be on how effective that playbook is.

And the thing that we need to see is people doing more work on countering the effects of that playbook, on pushing back on smears, on fighting much more aggressively when Congress comes calling in this way. And that, I think, is the key takeaway from my cautionary tale.

The desperate need for a “pro-reality” coalition of philanthropists and activists

SHEFFIELD: Yeah. There’s just so much that needs to be done, and there’s so much money that is being put out there to promote falsehoods to the public. Because the issue is that reactionary media, reactionary foundations, organizations, they don’t engage in general interest, [00:54:00] like, public philanthropy. So in other words, they’re not out there feeding the homeless from their foundation or helping people register to vote or various things like that. Or funding cleanup projects or something. They’re not doing that.

And so all of their money is focused on altering politics. Whereas the people who are the non-reactionary majority, the philanthropists, they have to support all these other institutions, like the Red Cross or all these things that are not political. And so the philanthropy is split, but it’s also missed.

Like, they don’t understand that you have to actually create things that are in favor of reality and against the, like, basically what we’re seeing is the emergence of an anti-epistemology

DIRESTA: and.

SHEFFIELD: And to me, the analogy that I use sometimes with people is H.G. [00:55:00] Wells’s novel, The Time Machine.

And at some point in the future, the humans in that era had speciated into two groups, and there were the Eloi that lived during the daytime and, you know, they had solved all problems for themselves and scarcity and whatnot. But they were totally unaware that there was this other group of post-humans who lived at night and preyed on them.

And, like, I feel like there’s so many knowledge workers and institutions, they don’t realize that we’re living in an information economy where there are predators.

DIRESTA: It’s a good metaphor for it. Yeah.

SHEFFIELD: And they’ve got to, they’ve got to wake up to that because everything that they do is actually under attack.

If not now, it will be later. Everything has a liberal bias in this worldview, no matter who it is. Like, I mean, it started with... Then it went to journalism. [00:56:00] Then it went to, now it’s the FBI that is liberally biased, an agency created by a far-right Republican, J. Edgar Hoover, and never run by anyone who was a registered Democrat, but it’s liberally biased. It’s liberally biased. And police are, and now the woke military. Like, everything is liberally biased. And, like, until you realize there are people out there that want to completely tear down all institutions, then you’re not going to win. I don’t know.

It’s kind of depressing.

DIRESTA: I mean, that was the one thing I wish I’d been more direct on in the book, was actually that point, right? It’s the need to understand that it comes for people and that you don’t have to do anything wrong in order for it to happen. I think there’s still a, you know, even like my parents, I was like, “Oh, I got a congressional subpoena and [00:57:00] Stephen Miller sued me.”

And they’re like, “What did you do?” And I was like, no, that’s not how this works. Because their model of politics is still this, obviously if you’re being investigated, like, there’s some cause for it. And I’ll confess also that I really did not realize how much things like lawsuits and everything else were just stuff that you could just file, and that you could just gum up a person’s life with meaningless requests and all the procedural drama that went along with it. And we found out that Stephen Miller had sued us when Breitbart tweeted it at us.

And I remember seeing that and being like, is this even real? Doesn’t somebody show up to your door with papers or something? And my, my Twitter sued, like, how does this work? But they were doing it because they were [00:58:00] fundraising off of it. Right. The groups that were part and parcel to the lawsuit.

And I thought, oh, I get it now. Oh, that’s so interesting. I always thought that the legal system was maybe biased in some ways around some types of cases, you read the stats about criminal prosecutions and things, but I’d never paid that close attention. It wasn’t really a thing that I followed. And then all this started and I thought, like, oh boy, wow, there’s really a whole lot of interesting things about this that I had no idea about, and I guess I’m going to get an education pretty quickly.

Procter and Gamble, one of the earliest victims of disinformation

SHEFFIELD: Uh, yeah, well, but at the end of the book, you do talk about some things that have worked in the past. And I mean, and it is the case, I mean, unfortunately the legal system has been badly corrupted by politicized and ideological judges. But nonetheless like you talk about one instance that I, that is kind of entertaining about Procter and Gamble.

DIRESTA: Oh yeah.

SHEFFIELD: The conspiracy theory in the ’80s against them. Why don’t you tell us about that?

DIRESTA: [00:59:00] Yeah, this was one of these satanic panic type things. There was an allegation that their logo, which was a man with sort of stars in his beard, had six-six-sixes in the curlicues and was evidence of some sort of satanic involvement.

And what’s interesting about rumors, which are just this unverified information, is people feel very compelled to share it. It’s interesting. It’s salacious. And what you see is this rumor that they’re somehow connected to Satanism begins to take off. And the challenge for them is, like, how to respond to it.

And it’s actually not the most uplifting story, because what you see them do is they try to put out fact checks, right? They try to explain where the artwork came from. They loop in some prominent evangelicals of the time, trying to get them to be the messenger saying, no, this is not real.

It comes out that actually, I think it was Amway, right, that one of their competitors was behind this in some way, was actually trying to promote it, [01:00:00] was sending out these rumors, was giving it to their membership, calling people and these sorts of things. They wind up suing the people who were spreading the rumors, but ultimately they do eventually abandon the logo, which is the sort of depressing part of the story for me, which is that it speaks to: if you don’t deny it, it doesn’t go away, right?

The rumor continues to sort of ossify and spread. If you do deny it, people aren’t necessarily going to believe you. You try to bring in your various advocates who can speak on your behalf. You aggressively sue the people who, it turns out, are doing this to you. And we’ve actually seen that happen more, right?

Some of the election rumors: Dominion, of course, won that settlement from Fox News. I think, I don’t remember, I think Smartmatic is yet to be decided. But you have this challenge of how do you respond. And yeah, I kind of wish they’d stuck with the logo, but I don’t know. That was one of these sort of canonical case studies in the challenge of responding to modern rumors.[01:01:00]

The covid lab leak hypothesis and how content moderation can be excessive

SHEFFIELD: Yeah. Well, and I mean, one of the things you do talk about also a little bit is understanding that these rumors and falsehoods that are circulated, in many cases, they are based on real beliefs that are not related, or real concerns that are maybe even legitimate concerns, and that trying to just immediately cut off those topics from discussion, that’s not going to be effective either. And I think probably the best example of that is the belief that the SARS-CoV-2 virus was created in a weapons laboratory, that hypothesis. Like, when I first heard that, I was like, oh, I would love to see the evidence for that. Like, that was my reaction to it.

DIRESTA: Yeah, I didn’t think it was that outrageous a claim.

Right. So the first, I wrote an [01:02:00] article about this. The bioweapon, right? That it was a bioweapon was part and parcel. Like, these two things emerged almost simultaneously. And I wrote about the bioweapon allegation because China made bioweapons allegations about the U.S. even as this was happening.

So I was talking about it as this sort of interesting great power propaganda battle that was unfolding. And what was interesting was that the Chinese, to support their allegation, were nutpicking from American loons, right? They were grabbing these American lunatics who were, like, upset about Fort Detrick.

Those were the people that China was pointing to, saying, look, some people on the internet, some Americans on the internet, are saying that COVID is a bioweapon created by the United States. And that was where they went with it. And I thought it was very interesting that our kind of online conspiracy theorists were split between, like, who had created the bioweapon.

This is very common, I find, in conspiracy theories today. It’s like superposition: you’re [01:03:00] like waiting for the observable thing to collapse reality down into one state, but you have these sort of two conflicting explanations growing simultaneously until all of a sudden everybody forgets about one.

So we do move past the idea that the U.S. created it, and we zero in then on the idea that China created it. And what’s interesting, though, is that the lab leak doesn’t require the bioweapon component. The lab leak is the accidental release. And that, I was like, okay, yeah. So it’s in the realm of the plausible, but it gets caught up in social media moderation policy.

And I think that was a huge own goal, in my opinion, because I think if you are making arguments for what kind of content should be throttled or taken down, right, when you go through the rubric of, like, how the world should be moderated, if you’re making an argument that something should be throttled or taken down, particularly taken down, there’s a massive backfire effect to [01:04:00] doing it.

And so the only time I think it’s justified is if there’s some really clear material harm that comes from the information being out there or viral or promoted. And so I do think something like the false cures can rise to that level at certain times, but the lab leak hypothesis, like, it was really hard to find a thread in which it was overtly, directly harmful in a way that a lot of the other COVID narratives were.

And so, I felt like it undermined, like, legitimacy in content moderation by being something of an overreach with no discernible justification. And that was my, I think I write this in the book, right? I just thought it was one of these things, it’s not like the others, as you look at this very long list of policies and rubrics.

And for that one to be in there, I think it was, like, marginally grouped in there under the allegation that saying it was a lab leak [01:05:00] was racist. And even that, I was like, I don’t know. I’m pretty center left, like, I’m not seeing it. Like, where are we getting this from? So that one I thought was

Well, or

SHEFFIELD: I mean, yeah. Or it was an inability to understand it. Yes, you can use that in a racist way. But the idea itself is not implicitly racist, it’s just not. And Twitter did the same kind of overreach with regard to Hunter Biden’s laptop. Now I should say that I personally was involved in the back end of that, along with other reporter friends of mine. We were all trying to get that data when they started talking about it.

Rudy’s people wouldn’t give it to anyone.

DIRESTA: Really? That’s interesting. I heard that Fox, like, hadn’t Fox turned it down? That was the story that I heard about that one.

SHEFFIELD: They did also. Yeah. They refused to run it. And then when the New York Post ran a story about it, [01:06:00] the evidence that they offered for it was nonsense.

It was a reassembled screenshot of a tweet. Like, that’s basically what it was. And like, in other words, there was no metadata that was provided. There was nothing, there was no source of information of anything. It was literally just screenshots of iMessage, and anyone can make those.

Like that’s not proof of anything. And the fact that Rudy was vouching for it meant probably less than zero.

DIRESTA: We didn’t work on that. I mean, it’s funny because, like I said, the Election Integrity Partnership, the work that we did was looking at things related to voting and things related to delegitimization.

And that was out of scope on all of it. So my one public comment on that was actually in a conversation with Bari Weiss. As the moderation decision was unfolding, I said, I think it’s real overreach here. I don’t think this is the right call.

It’s [01:07:00] already out there. It’s a New York Post article at this point. It’s not the hacked materials policy. By all means, the nonconsensual nudes, like, moderate those away, like, a hundred percent. Nobody opts into that by being the child of a presidential candidate. That was, I think, completely the right call, to remove the nudes as they begin to make their way out.

But the article itself, I said, okay, this is really an overreach. What Facebook did was they temporarily throttled it while they tried to get some corroboration of what it was, and then they let it go. Right. And that is, I think, a reasonable call compared to where Twitter went with it.

But I mean, what you see, ironically, in the Twitter Files about this is you see the people in Twitter trying to make this decision with incomplete information. And they’re not out there saying, like, man, we really need to suppress this because it will help Biden, or we really need to suppress this because, like, F Donald Trump. You just see them trying to make this call in a very sort [01:08:00] of human way.

So the whole thing, the one thing that I thought was really funny about it was the way it tied into the Twitter Files. So, nothing to do with us, again, nothing. They decided that, like, Aspen Institute had held this kind of threat casting: what if there is another hack and leak in the 2020 election, thinking about the hack and leak that had happened in 2016, right?

The Clinton emails, the Podesta emails, and the DNC’s emails. So they were like, okay, so one of the scenarios they come up with is that there is some hack and leak related to Hunter Biden and Burisma. And that was the scenario that they come up with. So this then comes to figure into the sort of conspiracy theory universe as, like, oh, they were pre-bunking it.

They knew this was going to happen. And the FBI, in cahoots with Aspen, in cahoots with Twitter, tried to get them to do this. And that was the [01:09:00] narrative that they ran with. And I thought, I wasn’t at that round table, that tabletop exercise, but, like, scenario planning is a pretty common thing, right?

Most political groups game out various scenarios. It was just sort of weird to me that they had to come up with some cause to justify Twitter’s moderation decision instead of just reading what Twitter itself said in the moment, which is, like, people trying to figure out what to do and really not knowing.

So they come up with a whole conspiracy theory about it.

SHEFFIELD: Yeah. And I guess we should say that if they had just let it go, not done anything to it, it wouldn’t have been that big of a deal, but now there’s this giant mythology built around it, that the Hunter Biden laptop story was suppressed.

And that is the reason that Joe Biden won in 2020. And it’s like, no one voted for Hunter Biden. [01:10:00] And, like, the idea of presidential relatives who are screw-ups, like, that’s super common. That is a thing in and of itself. You’re not voting for the relatives. But yeah, so it’s again, like, these are not good faith arguments that they’re making here.

And I think we should point that out even as we do criticize Twitter for doing the wrong thing.

DIRESTA: That’s a good point. And I don’t know, this is one of these things where, maybe, there’s been bad faith politics before, but as I was going through, like, historical research and stuff, trying to figure out how do people respond to rumors?

How do they respond to smears? That question of, like, what do you do with the bad faith attacks is one that I don’t think I’ve seen a whole lot of. Like, everybody is very good at documenting that they are bad faith, documenting the rumor, documenting who spreads it and how and all that other stuff.

But that response [01:11:00] piece is what is still missing, that sort of comms strategy. Like, how do you deal with this? Because I know I feel, when I get asked about these crazy Twitter Files bullshit claims about the CIA placing me in my job, I’m like, is it even worth explaining the grain of truth underlying the fact that I once worked there 20 years ago?

Or, because it feels so pointless. I wonder sometimes if the appropriate response is, “That’s bad faith bullshit, and I’m just not going to deal with it again. If you want to read about it, go read it on the internet.” I actually really don’t know at this point what the appropriate response strategy is.

I know people think that we should know. I know we tell election officials and public health officials and things like: respond quickly, get the facts out there. But that doesn’t necessarily diminish or respond to the bad faith attack. It’s simply putting out alternate information and hoping that people find it compelling.

So. [01:12:00]

SHEFFIELD: Yeah, no, it’s a problem. And I mean, honestly, I feel like the solution part, even though you do talk a little bit about it in the book, that’s probably its own. Yeah,

DIRESTA: I think so too.

SHEFFIELD: Probably

DIRESTA: That’s the next book. I’ll go reach out to all the people who’ve been smeared. And no, I think about, remember the book So You’ve Been Publicly Shamed?

Gosh, when did that come out? That was when, remember, I think it was Justine Sacco, was her name, right? She made that AIDS joke on the plane, got off the plane, and the entire world had been asking, “Has Justine landed yet?” That was one where I think it gets at this question of what do you do when there’s a mass attention mob, right?

That’s sort of online mobbing. But that question of how do you respond to bad faith attacks, I think, is one of the main ones for our politics right now. Yeah.

SHEFFIELD: It is. Yeah. And yeah, getting people to realize that Alex Jones was right about one thing. There is an [01:13:00] information war and only one side has been fighting it though.

JD Vance couch joke illustrates real differences between left and right political ecosystems

DIRESTA: And so, yeah, anyway, we started a whole conversation about Kamala and the coconuts on the couch. I know we’re, like, coming up on the hour here, but I’ve been, no, really entertained, I have to say, by watching that whole sort of instantaneous vibe shift, the “JD Vance is weird” as the message. Not even, we’re going to go through his policies and fight them point by point. No, just, like, the whole thing is weird, that’s it. Just diminish it, brush it off, ridicule it.

Maybe that’s the answer. I’ve been watching, again, not because of any particular interest in the candidacy as such, but the sort of meta question of the mechanics of the messaging in the race, I think, are really interesting.

SHEFFIELD: Yeah, well, and yeah, the couch rumor, I think, has been illustrative of how differently each side [01:14:00] of the spectrum handles things that are jokes or memes. On the right, they believe them.

I mean, gosh, there’s just so many examples of that. I mean, there was never any evidence that Barack Obama was born in Kenya. Nobody ever, like, I never even heard anybody talk to Kenyans, administrative, they didn’t even bother to provide evidence for it. And yet, they believed it.

And then, like, the thing with JD Vance and his alleged proclivities for intercourse, inter-sectional intercourse, people on the political left, they knew it wasn’t true and they said it wasn’t true, but they were just like, it’s fun and we’re going to talk about it because it fits within a larger point, and this guy is a very strange individual. And it is a flipping of the script that has just been [01:15:00] enraging, because, I mean, and I think Jesse Watters, the Fox host.

And actually, I guess I’ll play the, let me see if I can get the clip here so I can roll it in the show here. So yeah, like, Jesse Watters, the Fox News Channel host, he was outraged, almost like on the verge of tears, frankly, on his program this week as we’re recording here. I’m going to roll it.